Article Sneak Peek

Shadow AI poses unprecedented risks that far exceed traditional shadow IT challenges, requiring immediate attention from enterprise leaders.

• Shadow AI is already widespread:

73% of knowledge workers use AI tools daily, but only 39% of enterprises have formal AI governance policies in place.

• Data exposure risks are severe:

Once sensitive data enters AI systems that train on user inputs, it can become part of training datasets and permanently leave organizational control.

• Traditional security tools are inadequate:

Current DLP and endpoint monitoring solutions cannot detect or control the majority of AI applications employees use.

• Governance requires a multi-layered approach:

Effective shadow AI management needs role-specific policies, AI-aware monitoring tools, and comprehensive employee education programs.

• Action is urgently needed:

With 8.5% of employee AI prompts containing sensitive data and 485% growth in corporate data fed to AI tools, organizations must implement governance strategies immediately.

The key to success lies in transforming shadow AI from an unmanaged liability into a properly governed organizational asset that drives productivity while maintaining security and compliance.

Introduction

Recent data shows 73% of knowledge workers use AI tools daily, but only 39% of organizations have formal AI governance policies. This disconnect creates a growing challenge called shadow AI—AI applications that run outside official IT and security protocols.

Shadow AI creates more risks than traditional shadow IT does. Tech leaders report that employees adopt AI tools faster than teams can properly evaluate these applications for safety. Data leakage and exposure remain the biggest concerns for nearly two-thirds of decision makers when it comes to these shadow technologies.

Shadow AI includes any artificial intelligence technologies that operate without official oversight. Employees often turn to these tools to improve productivity and explore creative possibilities. Your organization likely deals with this issue already, since 91% of people have tried generative AI and 71% use it specifically for work. This piece explores why shadow AI poses more danger than its predecessor and what drives its rapid adoption. You'll also learn how to build a practical governance strategy that protects your enterprise.

The Rise of Shadow AI in the Enterprise

Shadow AI has become a major concern as employees adopt AI technologies without official approval. This trend reshapes how organizations handle technology governance and security.

What Is Shadow AI and How Is It Different from Shadow IT?

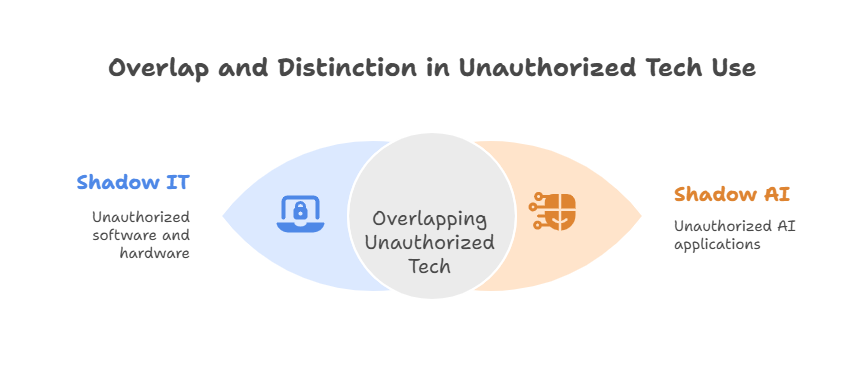

Shadow AI happens when employees use artificial intelligence tools without formal approval from their IT department. Shadow IT covers all unauthorized software or hardware, while shadow AI specifically targets artificial intelligence applications that run outside governance frameworks.

The main difference comes from the nature of these tools: shadow AI raises unique concerns about data management, model outputs, and automated decision-making. It also lets AI models access information in new ways that could move sensitive company data from internal systems to third-party or public platforms.

Why Are Employees Turning to Unsanctioned AI Tools?

Employees use shadow AI to improve productivity and accelerate processes. Common drivers include:

- Current solutions are too slow or inadequate

- Employees need quick automation for repetitive tasks

- Employees want to push innovation forward without waiting for approval

- Business units, not IT, control 84% of applications, so purchasing decisions are decentralized

These tools are easy to access as SaaS products that don't need installation or IT approval. The simple setup process encourages quick adoption before security teams can spot it.

The Scale of Adoption: Stats and Trends from Recent Reports

Shadow AI adoption has grown dramatically. Enterprise generative AI traffic jumped by over 890% between 2023 and 2024. The number of enterprise employees using generative AI applications increased from 74% to 96%.

Recent trends show concerning patterns:

- 38% of employees share sensitive work information with AI tools without permission

- Corporate data usage in AI tools grew by 485% from March 2023 to March 2024

- Sensitive data within these inputs almost tripled from 10.7% to 27.4%

- 73.8% of ChatGPT accounts used for work are non-corporate accounts that lack enterprise security controls

75% of knowledge workers already use AI tools at work, and half would keep using them even if explicitly told not to.

Why Is Shadow AI More Dangerous Than Shadow IT?

Shadow AI brings risks that go well beyond traditional shadow IT security concerns. These tools interact with data in unique ways that make the stakes much higher.

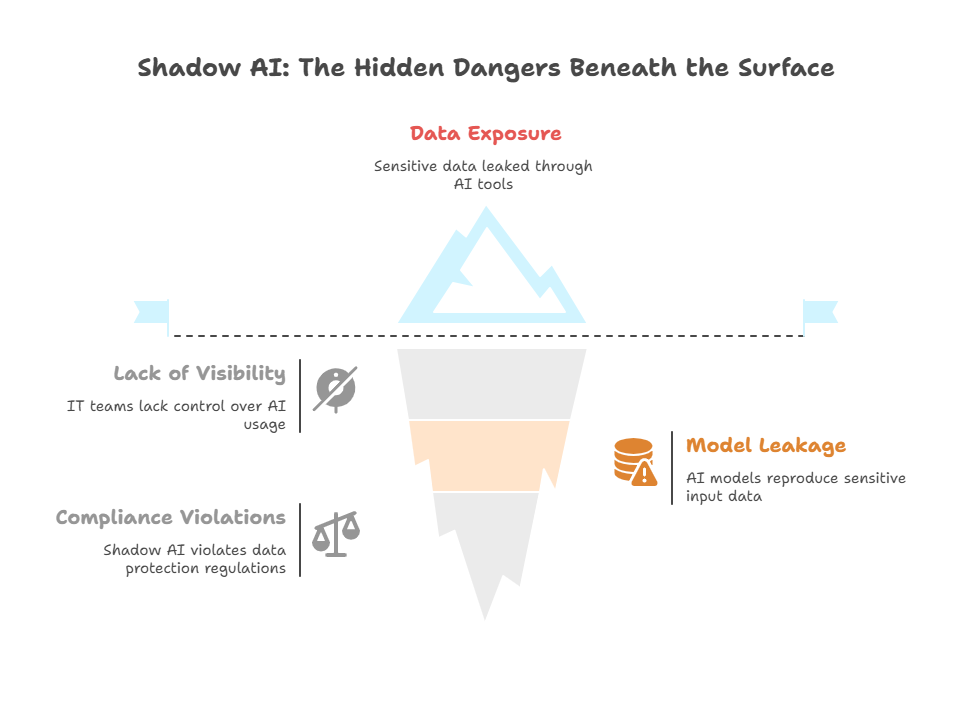

Data exposure risks with generative AI tools

Recent findings show that 8.5% of employee prompts to AI tools contain sensitive data. The exposed data includes customer information (46%), employee personally identifiable information (27%), and legal or financial details (15%). Free-tier AI platforms, which use information from user queries to train their models, account for over half (54%) of these leaks.

Once sensitive information enters a platform that trains on user queries, it can become part of the model's training dataset and slip out of organizational control. Data moves from secure internal systems to third-party platforms with unclear data policies, creating major exposure risks.

Lack of visibility and control for IT teams

Many employees don't realize their SaaS tools now have AI features, which lets shadow AI operate unnoticed in organizations. Gartner predicts that by 2026, more than 80% of independent software vendors will add generative AI capabilities to their SaaS applications.

Security teams face critical blind spots because they can't track who uses AI tools, what data gets processed, or how it's stored. This lack of visibility makes it nearly impossible to enforce consistent security policies.

AI models learning from sensitive inputs

AI systems, especially generative ones, can remember and accidentally reproduce sensitive input data in future responses. This issue, known as "model leakage" or "unwanted memorization," means private information might show up in responses to other users.

One electronics company learned this the hard way. Its employees pasted proprietary source code into ChatGPT to help debug it, sending trade secrets to a third-party platform where the code could be retained and potentially resurface in responses to other users. The leak exposed confidential information and damaged the company's reputation.

Legal and compliance blind spots

Shadow AI can lead directly to violations of regulations like GDPR, HIPAA, and CCPA. Research shows 57% of organizations lack an AI policy to guide proper AI usage.

Because they can't see which tools employees use, companies might not learn about compliance violations until they face legal consequences. These blind spots can lead to heavy fines, breach-of-contract issues, and lasting reputational damage.

Why Are Traditional IT Policies Failing?

Traditional security measures were never designed for today's AI challenges, and they no longer work against shadow AI. This leaves major gaps in how companies protect themselves.

Limitations of Current DLP and Endpoint Tools

DLP tools have long been the backbone of information security, but they show serious weaknesses in an AI-driven world. These systems depend on static, rule-based methods that security teams must update manually, which produces excessive false alarms and alert fatigue. The tools also lack the context to separate normal business activity from potential data theft.

The biggest issue? Standard DLP solutions struggle with unstructured sensitive data, which is exactly the kind of data that often ends up in generative AI tools. These systems break down as data grows more complex and voluminous.
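To make this limitation concrete, here is a minimal Python sketch of the static, pattern-based checking described above. The rule names and regular expressions are hypothetical examples, not taken from any DLP product: they catch a well-formatted identifier but have no way to recognize confidential meaning in free-form text.

```python
import re

# Hypothetical static rules of the kind a traditional DLP tool might use.
# Each rule is a regular expression tied to one well-structured data type.
DLP_RULES = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "credit_card": re.compile(r"\b(?:\d{4}[- ]?){3}\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def scan_prompt(prompt: str) -> list[str]:
    """Return the names of every static rule that matches the prompt."""
    return [name for name, pattern in DLP_RULES.items() if pattern.search(prompt)]


if __name__ == "__main__":
    structured = "Customer SSN is 123-45-6789, please summarize the account."
    unstructured = (
        "Summarize our unreleased Q3 pricing strategy: we plan to cut the "
        "enterprise tier by 20% to undercut our main competitor."
    )
    print(scan_prompt(structured))    # ['us_ssn']
    print(scan_prompt(unstructured))  # []  (confidential, yet no rule fires)
```

Adding more patterns only helps with more structured formats; recognizing that an unreleased pricing plan is confidential requires context that regular expressions do not capture.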

The challenge of monitoring human behavior

Human behavior adds another layer of complexity. One study shows that 97% of IT decision-makers see major risks in shadow AI, yet 91% of employees see little or no risk in using these tools. This gap leads to dangerous behavior:

- 60% of employees use unauthorized AI tools more than they did a year ago

- 93% input information into AI tools without proper approval

- 32% have entered private client data into AI tools without permission

As employees choose speed over security, shadow AI will keep growing beyond what monitoring systems can track.

The explosion of AI tools and shadow technologies

The number of available AI tools has overwhelmed IT governance systems. About 85% of IT leaders say their teams can't evaluate new AI tools as fast as employees adopt them.

Most shadow AI tools stay hidden because they run as web-based SaaS applications that need no installation. Network monitoring struggles to distinguish these tools from regular web traffic, which creates huge blind spots in security.

Current technology governance approaches must change to handle the unique risks that shadow AI brings.

Building a Governance Strategy for Shadow AI

Expert Quote

“AI innovation can’t thrive in the dark. The instant you codify clear, role‑based guardrails—and back them with real‑time visibility—shadow projects lose their allure and productivity leaps forward without compromising security.” — Manish Sharma, Co‑Founder & CRO, Rezolve.ai

Shadow AI continues to spread rapidly in enterprises. CIOs need detailed governance strategies that balance innovation and security. A well-laid-out approach can help turn shadow AI from a liability into a managed asset.

Creating AI usage policies by role and department

Organizations need clear AI governance policies customized for different roles and departments. A working group of board members, executives, and other stakeholders should take the lead. The policy must outline acceptable AI use cases and ban practices that risk data exposure or compliance violations. For example, technical teams might be permitted more advanced AI usage, while customer-facing roles need tighter controls on data inputs.
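One way to make a role-based policy enforceable is to express it as data that tooling can check. The Python sketch below is illustrative only: the role names, tool names, and data classifications are hypothetical placeholders, and a real policy table would come out of the governance working group.

```python
from dataclasses import dataclass

# Hypothetical data classifications, ordered from least to most sensitive.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass(frozen=True)
class RolePolicy:
    approved_tools: frozenset[str]
    max_data_class: str  # most sensitive class this role may send to AI tools


# Illustrative policy table; role names, tool names, and limits are placeholders.
POLICIES = {
    "engineering": RolePolicy(frozenset({"code-assistant", "internal-llm"}), "internal"),
    "customer_support": RolePolicy(frozenset({"internal-llm"}), "public"),
}


def is_allowed(role: str, tool: str, data_class: str) -> bool:
    """Allow a request only if the role has a policy, the tool is approved
    for that role, and the data is no more sensitive than the role's ceiling."""
    policy = POLICIES.get(role)
    level = SENSITIVITY.get(data_class)
    if policy is None or level is None:
        return False  # default-deny for unknown roles or data classes
    return tool in policy.approved_tools and level <= SENSITIVITY[policy.max_data_class]


if __name__ == "__main__":
    print(is_allowed("engineering", "code-assistant", "internal"))         # True
    print(is_allowed("customer_support", "internal-llm", "confidential"))  # False
    print(is_allowed("marketing", "internal-llm", "public"))               # False
```

The check is default-deny: a role without a policy, an unknown data class, or an unapproved tool is rejected, so gaps in the policy table fail safe rather than open.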

Deploying AI-aware monitoring tools

Specialized monitoring solutions help detect shadow AI usage that standard tools miss. This matters because an estimated 85-90% of SaaS is shadow SaaS. Modern AI-aware monitoring platforms let you:

- Track AI usage organization-wide

- Enforce acceptable use policies

- Block sensitive inputs before they reach AI tools

Some platforms also watch for accuracy issues and alert teams when models behave outside defined parameters.
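As a rough illustration of tracking AI usage organization-wide, the Python sketch below counts requests to generative AI domains per user from web proxy logs. The log format (one `user url` pair per line) and the domain watchlist are assumptions for illustration; commercial AI-aware monitoring platforms rely on far richer signals than domain matching.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical watchlist of generative AI domains; a real deployment would
# maintain this list from a curated, regularly updated source.
AI_DOMAINS = {"chat.openai.com", "chatgpt.com", "gemini.google.com", "claude.ai"}


def is_ai_host(host: str) -> bool:
    """Match a watched domain exactly, or any subdomain of it."""
    host = host.lower()
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def summarize_ai_usage(log_lines):
    """Count AI-tool requests per user from 'user url' log lines
    (an assumed format, not any specific proxy's)."""
    usage = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip malformed lines
        user, url = parts
        host = urlparse(url).hostname or ""
        if is_ai_host(host):
            usage[user] += 1
    return usage


if __name__ == "__main__":
    sample_logs = [
        "alice https://chatgpt.com/c/123",
        "bob https://intranet.example.com/wiki",
        "alice https://claude.ai/new",
        "carol https://gemini.google.com/app",
    ]
    print(summarize_ai_usage(sample_logs))  # Counter({'alice': 2, 'carol': 1})
```

Domain matching alone misses AI features embedded in already-approved SaaS tools, which is why it is at most a starting point for visibility rather than a complete monitoring strategy.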

Educating employees on safe AI practices

Employee education is a vital part of effective governance, especially since research shows 57% of organizations lack an AI policy for their employees. A good AI training program teaches staff about AI capabilities and limitations. Role-specific training should cover:

- AI security risk identification

- Ethical AI usage guidelines

- Data protection protocols

Regular training sessions keep teams updated on new threats and promote transparency.

How can ITSM platforms like Rezolve.ai help?

IT Service Management platforms provide comprehensive solutions for managing shadow AI challenges. These tools reveal AI adoption patterns, simplify approvals, and enforce usage policies. ITSM solutions implement governance controls that support ethical AI decisions throughout the AI lifecycle, establish accountability, and maintain compliance documentation. This transforms shadow AI from an uncontrolled risk into a well-governed asset.

Closing Note

Shadow AI transforms enterprise risk management in ways that go beyond traditional shadow IT challenges. This piece has shown how AI tools create new data exposure risks when sensitive information flows into third-party platforms. Once your data enters a system that trains on user inputs, it could become part of the model's training dataset, and you may lose control of it permanently.

The numbers tell a clear story: 73% of knowledge workers use AI tools daily, yet only 39% of enterprises have proper governance policies. Your priority should be a comprehensive governance strategy that includes role-specific policies, AI-aware monitoring tools, and employee education programs.

Acting now helps shield your organization from compliance violations, data leaks, and reputational damage. ITSM platforms help manage these challenges effectively by providing the needed visibility and enforcing usage policies automatically.

Shadow AI will keep growing alongside other AI technologies. Your approach needs to balance innovation with proper safeguards. The aim isn't to stop AI adoption but to turn it from an unmanaged risk into a well-governed asset that improves productivity while keeping security intact.

Key Takeaways:

- Shadow AI poses greater risks than shadow IT, including serious data leaks.

- 73% of workers use AI tools daily, but most orgs lack governance.

- Traditional IT policies and tools can’t detect or control AI usage.

- Governance must include role-based policies, AI-aware monitoring, and training.

- ITSM platforms like Rezolve.ai help track, manage, and govern shadow AI.

FAQs

Q1. What is shadow AI and how does it differ from shadow IT?

Shadow AI refers to the use of artificial intelligence tools by employees without official approval from IT departments. Unlike shadow IT, which covers any unauthorized software or hardware, shadow AI specifically involves AI applications that can access and process data in unique ways, potentially exposing sensitive information to third-party or public platforms.

Q2. Why are employees turning to unsanctioned AI tools?

Employees often adopt shadow AI to enhance productivity, automate repetitive tasks, and accelerate innovation. The accessibility of AI tools as SaaS products, combined with the decentralization of purchasing decisions, encourages rapid adoption before security teams can properly assess the risks.

Q3. What are the main risks associated with shadow AI?

The primary risks of shadow AI include data exposure, lack of visibility for IT teams, AI models learning from sensitive inputs, and legal and compliance blind spots. These risks can lead to the unintended sharing of proprietary information, compliance violations, and potential reputational damage.

Q4. Why are traditional IT policies failing to address shadow AI?

Traditional security approaches are ineffective against shadow AI because they rely on static, rule-based methods that can't keep up with the rapid proliferation of AI tools. Additionally, these policies struggle to monitor human behavior and detect web-based SaaS applications that don't require formal installation.

Q5. How can organizations build an effective governance strategy for shadow AI?

To manage shadow AI effectively, organizations should create role-specific AI usage policies, deploy AI-aware monitoring tools, educate employees on safe AI practices, and utilize ITSM platforms. This multi-layered approach helps balance innovation with security, transforming shadow AI from an unmanaged risk into a properly governed organizational asset.
