What Is Vision AI?

Vision AI, short for Computer Vision Artificial Intelligence, refers to the use of machine learning algorithms and AI models that can analyze and interpret visual data such as images, videos, and real-time camera feeds. It gives machines the ability to "see" and make sense of the world visually, similar to how humans use their eyes and brains.

While human vision is powered by a lifetime of sensory input and biological neural networks, Vision AI uses deep learning, most commonly convolutional neural networks (CNNs), to mimic this process. It identifies patterns, textures, shapes, and colors within digital imagery, allowing it to detect objects, recognize faces, interpret scenes, and draw insights from what it sees.

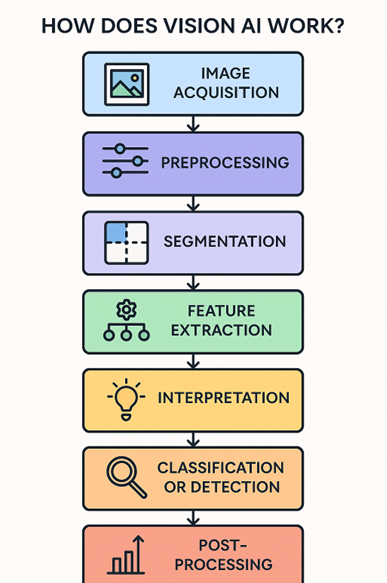

How Does Vision AI Work?

Vision AI systems typically involve several stages, each playing a crucial role in helping machines see and understand the world:

- Image Acquisition

Data is first captured from sources such as smartphone cameras, CCTV feeds, drone footage, or medical imaging equipment. This raw input forms the foundation of all subsequent analysis.

- Preprocessing

Before analysis, images or video frames are cleaned and standardized. This may include resizing, noise reduction, contrast enhancement, and normalization to ensure consistency and reduce variability.
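To make the preprocessing step concrete, here is a minimal pure-Python sketch that resizes, denoises, and normalizes a grayscale image. It assumes an image is a list of rows of 0-255 pixel values; the function names and the 4x4 target size are illustrative choices, not the API of any particular library. Real pipelines would typically rely on an image-processing library and much larger input sizes.

```python
from typing import List

# Assumption for illustration: a grayscale image is a list of rows of 0-255 values.
Image = List[List[float]]


def resize_nearest(img: Image, new_h: int, new_w: int) -> Image:
    """Resize by copying the nearest source pixel (nearest-neighbour sampling)."""
    h, w = len(img), len(img[0])
    return [
        [img[r * h // new_h][c * w // new_w] for c in range(new_w)]
        for r in range(new_h)
    ]


def mean_filter(img: Image) -> Image:
    """3x3 box blur for simple noise reduction; border pixels average only in-bounds neighbours."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [
                img[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            ]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def normalize(img: Image) -> Image:
    """Scale 0-255 pixel values into the range [0.0, 1.0]."""
    return [[p / 255.0 for p in row] for row in img]


def preprocess(img: Image, size=(4, 4)) -> Image:
    """Resize to a fixed input size, denoise, then normalize."""
    return normalize(mean_filter(resize_nearest(img, *size)))


raw = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]  # synthetic 8x8 gradient
model_input = preprocess(raw)
print(len(model_input), len(model_input[0]))  # 4 4
```

Fixing the input size and value range this way is what lets a single model accept frames from very different cameras.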

- Segmentation

In this step, the system divides the image into meaningful parts, or “segments.” For example, in a medical scan, segmentation might isolate a tumor from surrounding tissue; in an autonomous vehicle’s camera feed, it separates the road from pedestrians and traffic signs. Segmentation lets the model focus on relevant areas within a scene and assign boundaries to objects.
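Modern systems usually learn segmentation with neural networks. The sketch below swaps in a much simpler classical technique, brightness thresholding followed by connected-component labelling, purely to show what “segments” and object boundaries look like as data. The threshold value, the sample pixel grid, and the helper names are hypothetical.

```python
from collections import deque
from typing import List, Tuple

Grid = List[List[int]]


def threshold(img: Grid, t: int) -> Grid:
    """Foreground (1) where the pixel is brighter than t, background (0) elsewhere."""
    return [[1 if p > t else 0 for p in row] for row in img]


def label_components(mask: Grid) -> Tuple[Grid, int]:
    """Give each 4-connected foreground region its own integer label via BFS flood fill."""
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not labels[r][c]:
                count += 1
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


def bounding_boxes(labels: Grid, count: int) -> List[Tuple[int, int, int, int]]:
    """(top, left, bottom, right) extent of each labelled segment, in label order."""
    boxes = {}
    for r, row in enumerate(labels):
        for c, k in enumerate(row):
            if k:
                t, l, b, rt = boxes.get(k, (r, c, r, c))
                boxes[k] = (min(t, r), min(l, c), max(b, r), max(rt, c))
    return [boxes[k] for k in range(1, count + 1)]


scan = [
    [200, 200, 0, 0],
    [200, 0, 0, 180],
    [0, 0, 0, 180],
]
labels, count = label_components(threshold(scan, 100))
print(count, bounding_boxes(labels, count))  # 2 [(0, 0, 1, 1), (1, 3, 2, 3)]
```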

- Feature Extraction

Deep learning models, particularly convolutional neural networks (CNNs), extract patterns such as edges, textures, and shapes from the image or its segmented regions. These features help the system recognize objects and categorize them accurately.
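The core operation inside a CNN layer is a small kernel slid across the image. This sketch applies one hand-set kernel (a Sobel filter that responds to vertical edges) followed by a ReLU activation; in a trained CNN the kernel weights are learned from data rather than chosen by hand, and a layer applies many kernels at once. As in most deep learning libraries, the “convolution” here is technically cross-correlation, since the kernel is not flipped.

```python
from typing import List

Matrix = List[List[float]]

# Hand-set vertical-edge kernel (Sobel-x). A trained CNN learns its kernels instead.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]


def conv2d(img: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation (no padding, stride 1), as computed by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(w - kw + 1)
        ]
        for r in range(h - kh + 1)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]


dark_to_bright = [[0, 0, 10, 10] for _ in range(4)]  # vertical edge in the middle
print(relu(conv2d(dark_to_bright, SOBEL_X)))  # [[40, 40], [40, 40]]
```

A flat region produces zeros and an edge in the opposite direction is suppressed by the ReLU, which is how a feature map ends up highlighting only the pattern its kernel is tuned to.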

- Interpretation

Interpretation is where the system makes sense of what it sees, going beyond just identifying objects. It involves understanding context, relationships between objects, or even predicting actions. For instance, in retail surveillance, Vision AI might not just detect a person and a product but also interpret whether an item is being purchased or potentially stolen based on behavioral cues.

- Classification or Detection

With features extracted and interpreted, the system can now identify what it’s looking at. It might classify an object as a “cat,” “traffic light,” or “tumor” or detect its location within the frame using bounding boxes or heat maps. Detection often includes multiple objects and their spatial relationships.
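Classification models commonly end with a softmax layer that turns raw scores (logits) into probabilities over the known classes. The sketch below assumes a hypothetical model has already produced logits for the three example labels from the text; the logit values are made up.

```python
import math
from typing import List, Sequence, Tuple

LABELS = ["cat", "traffic light", "tumor"]  # example classes from the text


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: Sequence[float], labels: Sequence[str] = LABELS) -> Tuple[str, float]:
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


label, prob = classify([2.0, 0.5, -1.0])  # made-up logits from a hypothetical model
print(label)  # cat
```

Detection models produce a score like this for each candidate box, together with the box coordinates.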

- Post-processing

Finally, the output is refined or visualized. This can involve displaying the results, triggering an automated response (such as a medical alert or robot action), or passing the data to another system for further decision-making and action.
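One common refinement step for detection output is non-maximum suppression (NMS), which drops duplicate boxes that overlap heavily with a higher-scoring box of the same label, measured by intersection over union (IoU). The sketch below implements a simple per-label NMS and then a hypothetical alert rule; the 0.5 IoU and 0.8 score thresholds are illustrative defaults, not recommendations.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


@dataclass
class Detection:
    label: str
    score: float
    box: Box


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Greedy NMS: keep a box unless a kept, higher-scoring box of the same label overlaps it too much."""
    kept: List[Detection] = []
    for d in sorted(dets, key=lambda d: d.score, reverse=True):
        if all(d.label != k.label or iou(d.box, k.box) < iou_threshold for k in kept):
            kept.append(d)
    return kept


def should_alert(dets: List[Detection], label: str, min_score: float = 0.8) -> bool:
    """Hypothetical trigger rule: fire when a confident detection of `label` remains."""
    return any(d.label == label and d.score >= min_score for d in dets)


raw = [
    Detection("person", 0.9, (0, 0, 10, 10)),
    Detection("person", 0.6, (1, 1, 11, 11)),  # near-duplicate of the first box
    Detection("person", 0.7, (50, 50, 60, 60)),
]
kept = non_max_suppression(raw)
print([d.score for d in kept])        # [0.9, 0.7]
print(should_alert(kept, "person"))   # True
```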

History of Vision AI

The journey of Vision AI began decades ago under the broader umbrella of computer vision. Early systems could perform basic tasks, such as edge detection or shape matching. But it wasn’t until the rise of big data, cloud computing, and deep learning algorithms in the 2010s that Vision AI began its modern renaissance.

A major milestone came in 2012, when a deep convolutional neural network called AlexNet won the ImageNet Large Scale Visual Recognition Challenge, a prestigious AI competition, with a top-5 error rate of about 15.3%, far ahead of the runner-up's 26.2%. The result signaled the rise of deep learning as the leading approach in computer vision. Since then, the field has grown rapidly, with major tech companies and research institutions investing heavily in image recognition, segmentation, and video analysis.

Applications of Vision AI

Vision AI is not just an experiment; it’s everywhere, subtly shaping our lives and powering the next wave of digital innovation.

| Vision AI essentially mimics human sight, using deep learning to recognize images and patterns in real time.

1. Healthcare

Vision AI is transforming diagnostics by helping machines detect medical conditions, in some studies with accuracy comparable to that of specialists. AI systems can analyze X-rays, CT scans, MRIs, and pathology slides to help detect cancer, pneumonia, brain injuries, and diabetic retinopathy.

For example, Google's DeepMind developed a Vision AI model that analyzes retinal OCT scans and recommends the correct referral for over 50 eye diseases with accuracy matching that of expert ophthalmologists. In oncology, AI can flag early-stage tumors that human radiologists might miss.

2. Retail

Brick-and-mortar retail stores are using Vision AI for inventory tracking, shopper analytics, and loss prevention. Systems can automatically track shelf stock, monitor customer movements, and flag likely shoplifting behavior with far less need for constant human monitoring.

Amazon Go stores famously use computer vision and sensors to create a checkout-free shopping experience: customers walk in, select items, and leave without stopping at a checkout counter. The system tracks which items are taken and charges customers automatically.

3. Hospitality and Restaurants

Restaurants are now turning to Vision AI to personalize customer service and streamline operations. For instance, Panera Bread has introduced palm recognition technology that enables customers to check in and pay using just their palms. This not only speeds up transactions but also links users to their loyalty accounts, providing a more personalized experience.

Additionally, restaurants are deploying vision-enabled kiosks to recognize repeat customers, track orders, and monitor table cleanliness in real time. Some fast-food chains use AI to track kitchen workflow and reduce wait times, while others use camera feeds to optimize staff allocation based on foot traffic patterns.

4. Zoos and Wildlife Monitoring

Several modern zoos are implementing Vision AI to monitor animal behavior and ensure their safety. Cameras equipped with AI can track animal movement, detect distress behaviors, and alert staff if something appears abnormal, such as aggression, lethargy, or escape attempts.

For example, Vision AI has been used to detect signs of illness in animals by analyzing gait patterns, posture, and facial expressions. This proactive approach enhances animal welfare while reducing the need for invasive checks, enabling zookeepers to respond quickly if any emergency or unusual event arises.

5. Manufacturing

In factories, Vision AI helps automate quality control by inspecting products as they are manufactured. These systems can detect cracks, misalignments, or surface irregularities too small for human inspectors to catch reliably, reducing human error and minimizing waste.

6. Agriculture

Farmers use Vision AI for precision agriculture—drones equipped with AI-powered cameras scan fields to identify signs of disease, pest infestations, or water stress. This enables targeted interventions and higher yields with fewer resources.

7. Autonomous Vehicles

Self-driving cars are mobile Vision AI platforms. They rely on cameras, often combined with lidar and radar sensors, that feed into machine learning systems trained to recognize pedestrians, traffic signs, road conditions, and other vehicles in real time.

Tesla, Waymo, and other autonomous vehicle companies use Vision AI to process large volumes of camera and sensor data continuously, allowing cars to make split-second driving decisions.

8. Security and Surveillance

Vision AI is used in facial recognition, object detection, and behavior analysis. Governments and private firms use it in airports, public spaces, and building security systems to identify threats, track suspicious activity, or authenticate identity.

Emerging Trends in Vision AI

Vision AI continues to evolve rapidly, driven by advances in multimodal learning, deployment efficiency, and responsible AI practices. These trends build on foundational architectures and enable broader, more powerful applications across industries.

Multimodal AI Integration

Vision AI is being increasingly integrated into multimodal systems, which are models that can process and relate visual, textual, and auditory inputs. By aligning vision with natural language and sound, these systems gain deeper contextual understanding and offer more intuitive interactions. For example, a multimodal AI might analyze a product image while interpreting a spoken customer query, enabling smarter recommendations or visual question answering. This synergy enhances versatility across real-world applications, from assistive technologies to complex data interpretation tasks.
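One widely used way to relate images and text, popularized by models such as CLIP, is to map both into a shared embedding space and compare them with cosine similarity. The sketch below ranks product images against a customer query (for example, a transcribed spoken request). The three-dimensional vectors and file names are made up; in a real system the vectors would come from trained image and text encoders and have hundreds of dimensions.

```python
import math
from typing import Dict, List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated (orthogonal)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def rank_images(query_emb: Sequence[float], image_embs: Dict[str, List[float]]) -> List[str]:
    """Return image names ordered from best to worst match for the query."""
    return sorted(image_embs, key=lambda name: cosine(query_emb, image_embs[name]), reverse=True)


# Made-up embeddings standing in for encoder outputs.
query_embedding = [0.9, 0.1, 0.2]  # e.g. "I'm looking for running shoes"
image_embeddings = {
    "shoe.jpg": [0.8, 0.2, 0.1],
    "handbag.jpg": [0.1, 0.9, 0.3],
    "watch.jpg": [0.2, 0.1, 0.9],
}
print(rank_images(query_embedding, image_embeddings))  # ['shoe.jpg', 'watch.jpg', 'handbag.jpg']
```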

Edge-Based Real-Time Vision AI

As hardware becomes more powerful and efficient, Vision AI is being deployed directly on edge devices, such as smart cameras, mobile platforms, and IoT sensors. This shift enables real-time visual processing without requiring constant cloud connectivity, thereby reducing latency and enhancing privacy. Edge deployment is particularly valuable in industries requiring immediate decision-making or operation in bandwidth-constrained environments, such as manufacturing, logistics, and safety monitoring.

Explainable and Trustworthy AI

With Vision AI playing an increasingly critical role in high-stakes environments, there is a growing focus on explainability, fairness, and accountability. Organizations are investing in tools that help developers and stakeholders understand how and why a system makes decisions. Visual explanations, such as heat maps or attention maps, enable transparency, while model auditing and bias mitigation strategies support ethical and regulatory compliance. These measures are crucial for building trust with users and ensuring the responsible deployment of these systems.
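Heat maps can be produced in several ways; one of the simplest is occlusion sensitivity: mask one region at a time and record how much the model's score drops. The sketch below uses a hypothetical toy “model” whose score depends only on the top-left corner of the image, so the resulting heat map should highlight exactly that region. The patch size and zero baseline are illustrative assumptions.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_map(img: Image, model: Callable[[Image], float],
                  patch: int = 2, baseline: float = 0.0) -> Image:
    """Heat map where each cell holds the score drop caused by masking its patch."""
    h, w = len(img), len(img[0])
    base_score = model(img)
    heat = [[0.0] * w for _ in range(h)]
    for r0 in range(0, h, patch):
        for c0 in range(0, w, patch):
            rows = range(r0, min(r0 + patch, h))
            cols = range(c0, min(c0 + patch, w))
            occluded = [row[:] for row in img]  # copy so the original image is untouched
            for r in rows:
                for c in cols:
                    occluded[r][c] = baseline
            drop = base_score - model(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


def toy_model(img: Image) -> float:
    """Hypothetical stand-in classifier: 'confidence' is the mean of the top-left 2x2 block."""
    return sum(img[r][c] for r in range(2) for c in range(2)) / 4


heat = occlusion_map([[1.0] * 4 for _ in range(4)], toy_model)
for row in heat:
    print(row)
```

Larger values mark regions the model relied on; overlaid on the input image, this grid becomes the kind of visual explanation described above.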

Synthetic Data Generation

Access to high-quality, labeled image data remains a challenge, especially in specialized or sensitive domains. Synthetic data, artificially generated visual content used for training AI systems, is emerging as a scalable solution. When used in conjunction with real-world datasets, synthetic data can enhance model robustness, expedite development cycles, and fill gaps where data may be scarce or biased. This approach is particularly useful for detecting rare events, analyzing industrial defects, and simulating complex environments.
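A minimal illustration of why synthetic data is attractive: when you generate the image yourself, the label is known exactly. This sketch draws a bright rectangular “defect” at a random position on a noisy gray canvas and returns the matching bounding box. The canvas size, noise level, and defect shape are arbitrary assumptions; production pipelines typically use rendering engines or generative models instead.

```python
import random
from typing import List, Tuple

Sample = Tuple[List[List[int]], Tuple[int, int, int, int]]


def make_synthetic_sample(rng: random.Random, size: int = 16,
                          max_defect: int = 5, noise: int = 10) -> Sample:
    """Return (image, box): a noisy gray canvas with one bright rectangle at a known location."""
    img = [[128 + rng.randint(-noise, noise) for _ in range(size)] for _ in range(size)]
    h, w = rng.randint(1, max_defect), rng.randint(1, max_defect)
    top, left = rng.randint(0, size - h), rng.randint(0, size - w)
    for r in range(top, top + h):
        for c in range(left, left + w):
            img[r][c] = 255  # the synthetic "defect"
    return img, (top, left, top + h - 1, left + w - 1)  # inclusive (top, left, bottom, right)


def make_dataset(n: int, seed: int = 0) -> List[Sample]:
    """Seeded generator so the same dataset can be reproduced exactly."""
    rng = random.Random(seed)
    return [make_synthetic_sample(rng) for _ in range(n)]


data = make_dataset(5)
print(len(data), data[0][1])
```

Because the generator is seeded, the same labelled set can be regenerated on demand and mixed with real images for training.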

What’s Next for Vision AI?

Vision AI is steadily becoming a foundational component of modern technology, with its capabilities expanding across industries and environments. As tools become more refined and accessible, the integration of Vision AI into everyday systems, from logistics and customer service to healthcare and agriculture, will continue to grow.

We can expect Vision AI to enhance the functionality of augmented reality (AR) platforms, assist in real-time diagnostics and inspection, and contribute to smarter urban infrastructure. Its ability to provide machines with visual awareness opens the door to more efficient automation, personalized user experiences, and proactive problem-solving in both digital and physical spaces.

As with any powerful technology, responsible design and deployment are key. Vision AI can be a force for meaningful innovation, supporting real-world needs while aligning with human values.

With Vision AI, machines will see the world more clearly and help build technologies that are more capable and responsive.

FAQs

- What is Vision AI used for?

Vision AI is used to analyze images and videos to detect objects, read text, recognize faces, and understand visual patterns. It powers applications like quality control, surveillance, facial recognition, and medical imaging.

- Is Vision AI the same as computer vision?

Vision AI is often used interchangeably with computer vision, but it typically refers to cloud-based or AI-powered services that apply computer vision techniques. Computer vision is the broader field, while Vision AI focuses on practical, AI-driven applications.

- How accurate is Vision AI?

The accuracy of Vision AI depends on the use case, model quality, and training data. On well-defined tasks such as object detection or OCR under controlled conditions, it can exceed 90% accuracy, but performance may drop in complex or dynamic environments.
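A headline accuracy figure can hide weaknesses, especially when one class is rare. The sketch below computes accuracy and per-class recall on a hypothetical inspection set of 95 good parts and 5 defective ones, scored against a model that always predicts “ok”. The labels and counts are invented for illustration.

```python
from typing import Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true: Sequence[str], y_pred: Sequence[str], positive: str) -> float:
    """Share of actual `positive` items that the model found."""
    actual = sum(t == positive for t in y_true)
    found = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    return found / actual if actual else 0.0


y_true = ["ok"] * 95 + ["defect"] * 5  # hypothetical inspection set
y_pred = ["ok"] * 100                   # a model that never flags a defect
print(accuracy(y_true, y_pred))          # 0.95
print(recall(y_true, y_pred, "defect"))  # 0.0
```

The model scores 95% accuracy while missing every defect, which is why evaluations usually report per-class metrics alongside overall accuracy.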

- Can Vision AI work in real time?

Yes, Vision AI can process visual data in real time, enabling instant object detection, motion tracking, and event alerts. This is especially useful in applications like surveillance, autonomous vehicles, and industrial automation.

- What industries benefit most from Vision AI?

Industries such as IT, healthcare, manufacturing, retail, security, and agriculture benefit significantly from Vision AI. It helps automate inspections, monitor safety, enhance customer experiences, and improve operational efficiency through visual insights.
